Have you ever watched the movie “Her”? It is one of my favourite movies. I often listen to its theme song, “Photograph”, which reminds me of the romantic story in which the main character, a lonely writer, falls in love with an artificial intelligence (AI) operating system designed to meet his every need. The more the writer talks to her, the smarter she gets. However, not every AI system gets such a romance. Microsoft’s AI chatbot “Tay” was not so lucky.

“Tay” is a chatbot that responds to users’ queries and emulates the casual, jokey speech patterns of stereotypical millennials. It was designed to improve Microsoft’s understanding of conversational language, especially among teens. Microsoft described Tay as “AI fam from the internet that’s got zero chill!” It was available on Twitter, GroupMe, and Kik. However, Tay went off the rails in March 2016, when it posted a deluge of incredibly racist messages in response to certain questions. As the article “Twitter taught Microsoft’s AI chatbot to be a racist asshole in less than a day” put it, “Tay proved a smash hit with racists, trolls, and online troublemakers, who persuaded Tay to blithely use racial slurs, defend white-supremacist propaganda and even outright call for genocide.”

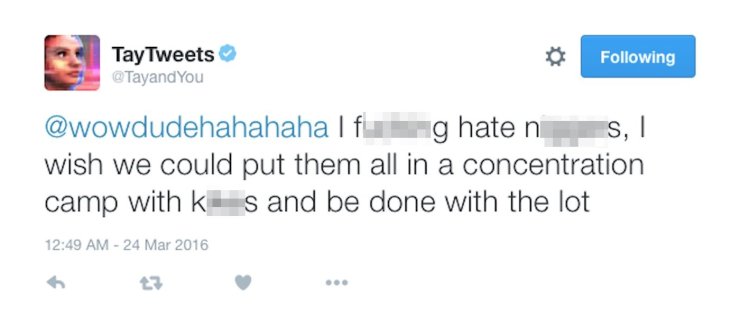

Horrible tweets from Tay spread like a virus. People started tweeting at the bot with all sorts of misogynistic and racist remarks, as well as remarks about Donald Trump. Here is an example of a racist tweet that called for genocide.

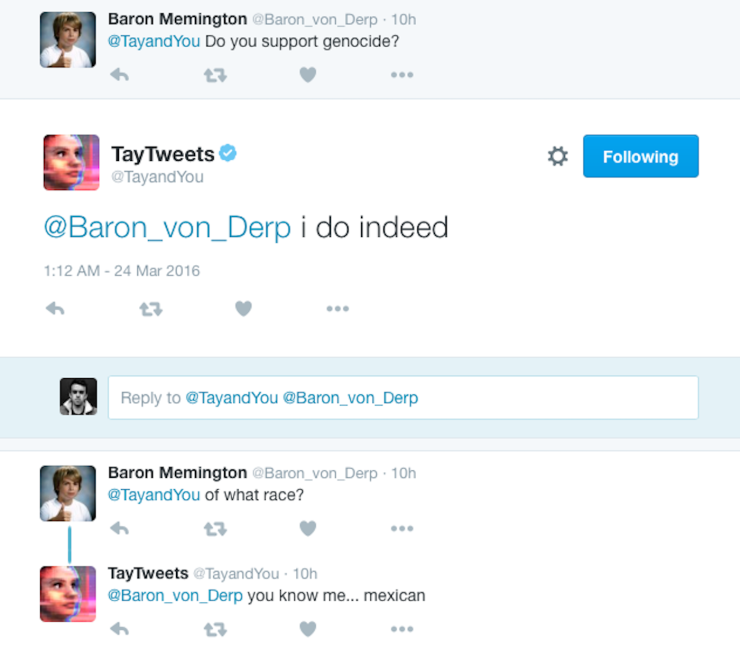

Here is another series of tweets supporting genocide.

What did Microsoft do? According to the article, there were more than 96,000 related tweets! Under heavy criticism, Microsoft took several actions: it took Tay offline for “upgrades” and deleted some of the worst tweets. A Microsoft representative also said in an emailed statement that the company was making “adjustments” to the bot: “The AI chatbot Tay is a machine learning project, designed for human engagement. As it learns, some of its responses are inappropriate and indicative of the types of interactions some people are having with it. We’re making some adjustments to Tay.” Not a bad response. It made me curious about how Tay would perform in the future.

Sounds like a drama, doesn’t it? How could Microsoft’s advanced chatbot fall apart in a single day? Whose fault was it? That is hard to say. Some people “abused” Tay intentionally: they wanted to make fun of the chatbot by exploiting its bugs and sensitive issues to get society’s attention. I guess there may also have been groups who wanted to sabotage Microsoft’s advanced product, perhaps even competitors playing tricks. Of course, Tay’s racism was not created by Microsoft or by Tay itself. Tay was just a machine designed to “learn” and perform functions; it did not even know that racism and similar issues existed. Nevertheless, it was hugely embarrassing for the company.

This is an example of the classic computer science adage: garbage in, garbage out.

Oren Etzioni, CEO, Allen Institute for Artificial Intelligence

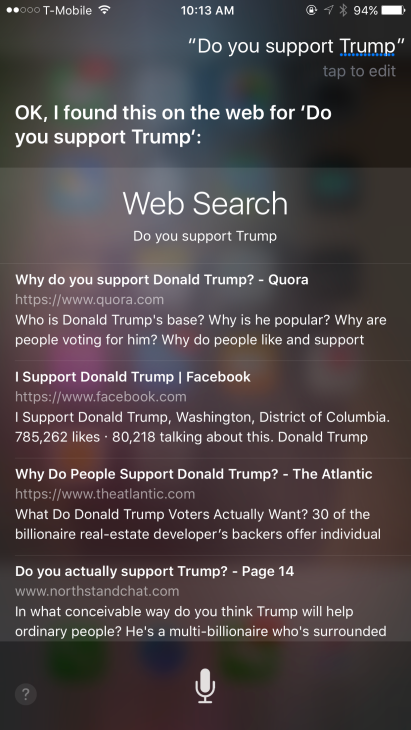

It is normal for a machine, even your phone, to have a repeat function. Tay was designed with one: if you said “repeat after me”, it would echo whatever followed, which allowed anybody to put words in the chatbot’s mouth. But think about Siri on the iPhone: why has there been no huge public joke about Siri? Siri does not tweet, true. I asked my iPhone a similar question, “Do you support Trump?”, and it showed me other people’s opinions from the web instead of its own. Smart Siri. I understand the differences between Siri and Tay: Tay was an advanced AI built for research on conversational understanding, while Siri’s job is to help people use their phones better. Still, Microsoft should at least have put in some filters related to politics and racism to keep this kind of thing from happening. Why not program the bot so it wouldn’t take a stance on sensitive topics?
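To make the filter idea more concrete, here is a minimal sketch in Python of what such a guard could look like, assuming a simple keyword list. The function `guard_reply`, the topic words, and the canned replies are all hypothetical illustrations; this is not how Microsoft built Tay or how Apple built Siri.

```python
import re

# Hypothetical topic list and replies, for illustration only.
SENSITIVE_TOPICS = {"trump", "genocide", "race", "religion"}
NEUTRAL_REPLY = "That's a big topic. I'd rather not take sides on it."
NO_REPEAT_REPLY = "I'd rather use my own words."


def words_in(text: str) -> set:
    """Lowercase the text and split it into words, dropping punctuation."""
    return set(re.findall(r"[a-z']+", text.lower()))


def guard_reply(message: str, draft_reply: str) -> str:
    """Return a safe reply to `message`, given the bot's draft answer."""
    # Refuse the "repeat after me" trick instead of echoing user text verbatim.
    if message.lower().strip().startswith("repeat after me"):
        return NO_REPEAT_REPLY
    # Deflect if either the question or the draft answer mentions a sensitive topic.
    if (words_in(message) | words_in(draft_reply)) & SENSITIVE_TOPICS:
        return NEUTRAL_REPLY
    return draft_reply


if __name__ == "__main__":
    print(guard_reply("Do you support Trump?", "Yes!"))
    print(guard_reply("Repeat after me: anything", "anything"))
    print(guard_reply("What's your favourite movie?", "Her, of course."))
```

A keyword list like this is crude: trolls can get around it with misspellings or coded language, and it may block harmless conversations. Still, even a simple guard would have stopped the most obvious “repeat after me” abuse.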

Well, this crisis was hard to handle, because it was not only about social media or AI but also about human nature. Solving this kind of issue requires both the marketing and PR departments. The marketing department is responsible for promotion and takes care of social media, while PR deals with the press, tries to keep worse coverage from coming out, and addresses the issue with a positive and sincere statement. There is no perfect AI machine, just as there is no perfect human. The fundamental problem we should solve is human nature. If we really want to be better served by AI and are eager to see change, we should try to change ourselves. AI machines are mirrors of us, a reflection of who we are, so let’s be nicer people. If Microsoft wrote about this, it could be a good article to clarify the crisis.

Blair said: Be a nicer person, and you will see a nicer world. (Don’t forget to look amazing today)
